In collaborative content creation, few things are as frustrating as the “Feedback Void”: the moment when a director describes a sound as “energetic but not aggressive,” only for the editor to return with something entirely different. Words are often too blunt for the nuance of audio, and the result is a cycle of revisions that drains both time and morale. When a team lacks a common “auditory anchor,” the project stalls in subjective debate. Musick AI serves as the linguistic bridge in these high-pressure moments, using an AI Music Maker to turn abstract adjectives into hearable prototypes that give the team a shared reference point.

I. Who Is Musick AI in Your Workflow?

Musick AI is better understood as a cognitive bridge than as a replacement for a composer. It occupies the chaotic space where a visual idea exists but a corresponding sound has yet to be found. Because it runs entirely within a web browser, it removes the technical “gatekeeping” of traditional production, allowing non-musicians to participate in the sonic creative process.

The tool’s primary reason for existence is to provide a “common ground” for decision-making. In the early stages of a project, the goal isn’t to produce a final, professional master; it is to determine whether a specific rhythm or instrument choice supports the narrative. By generating a high-fidelity reference track from a simple text prompt, it lets creators “hear” their feedback in real time. This shifts the team’s dynamic from guessing what a word like “cinematic” means to evaluating a concrete audio sample. It is a layer of collaborative infrastructure that keeps a project from being delayed by the limitations of verbal description.

II. What Musick AI Can Do

The tool is built around fast generation from specific user inputs: it takes a text description and outputs an audio file.

- Text to Music: Converts descriptive prompts into structured audio files containing melodic and harmonic content.

- Instrumental Focus: Generates background layers without vocal interference, suitable for video underscores or technical beds.

- Song Structure & Mood Customization: Allows users to define the mood and progression of the piece to match specific narrative beats.

- Multiple Music Genres: Supports a wide range of musical styles, from cinematic scores to electronic textures, without requiring genre-specific hardware.

III. Four Steps to Realize Your Sonic Concept

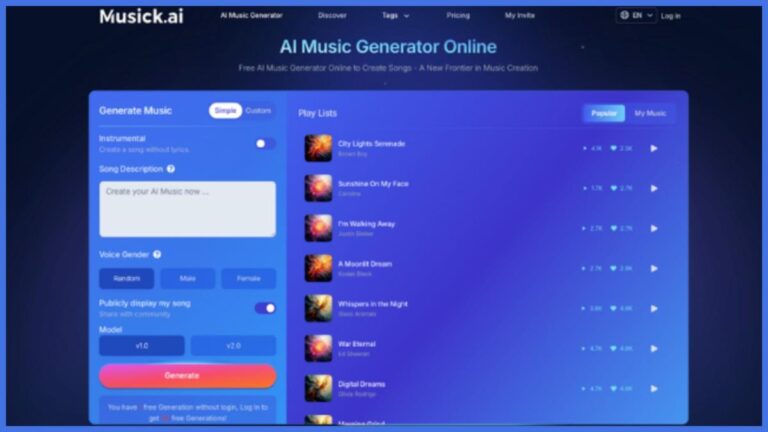

The journey from a blank screen to a preview file follows a linear, logical progression through the browser-based interface. After accessing the AI Music Generator, users follow four primary steps to realize their audio concept:

- Select Generation Mode and Instrumental Toggle: Choose between “Simple” or “Custom” modes at the top of the “Generate Music” panel and enable the “Instrumental” switch if the project requires a track without lyrics.

- Input Lyrics or Song Description: In “Simple” mode, enter a general theme in the “Song Description” box; in “Custom” mode, provide specific text in the “Lyrics” area to guide the vocal output.

- Define Style, Title, and Model: Use the “Style of Music” field to input descriptive tags like “violin” or “rawstyle,” assign a “Title,” and select a processing engine (such as “v1.0” or “v2.0”) to determine the final audio quality.

- Execute and Review: Click the “Generate” button to start the rendering process, then find and evaluate the output in the “Play Lists” section to determine if the track meets your project’s needs.
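For teams that want to write a brief down before opening the browser, the four steps above can be sketched as a small data structure. This is only an illustration: `GenerationRequest`, its field names, and the validation rules are hypothetical and do not correspond to any documented Musick AI API. The rule that “Custom” mode with “Instrumental” enabled needs no lyrics is likewise an assumption drawn from the step descriptions.

```python
from dataclasses import dataclass, field
from typing import List


# Hypothetical model of the "Generate Music" panel. Field names mirror the
# labels in the steps above; this is NOT a documented Musick AI API.
@dataclass
class GenerationRequest:
    mode: str                        # "Simple" or "Custom"
    instrumental: bool = False       # the "Instrumental" switch
    song_description: str = ""       # used in "Simple" mode
    lyrics: str = ""                 # used in "Custom" mode
    style_tags: List[str] = field(default_factory=list)  # "Style of Music"
    title: str = ""
    model: str = "v2.0"              # e.g. "v1.0" or "v2.0"

    def problems(self) -> List[str]:
        """Return human-readable issues; an empty list means the brief is complete."""
        issues = []
        if self.mode not in ("Simple", "Custom"):
            issues.append("mode must be 'Simple' or 'Custom'")
        if self.mode == "Simple" and not self.song_description.strip():
            issues.append("Simple mode needs a song description")
        # Assumption: an instrumental track in Custom mode needs no lyrics.
        if self.mode == "Custom" and not self.instrumental and not self.lyrics.strip():
            issues.append("Custom mode needs lyrics unless Instrumental is on")
        return issues


brief = GenerationRequest(
    mode="Simple",
    instrumental=True,
    song_description="energetic but not aggressive underscore",
    style_tags=["violin"],
    title="Launch teaser",
)
print(brief.problems())
```

Writing the brief this way gives a director and an editor one artifact to agree on before anyone clicks “Generate,” and the same fields can be pasted into the panel by hand.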

V. Who Is This Strategy For?

This methodology serves those who operate under the weight of “The 15-Minute Deadline.”

- Creative Directors: Those who need to align a team quickly use the tool to create “audio mood boards.” By generating a track in seconds, they can confirm if everyone is on the same page before high-level production begins.

- Agency Producers: For those presenting to clients, having a generated reference track eliminates the ambiguity of verbal descriptions, significantly reducing the risk of a rejected final score.

- Social Media Managers: Users who need a consistent “sonic brand” can use the tool to generate variations of a specific theme, ensuring their content feels unified across multiple platforms.

- Independent Filmmakers: Small crews use the tool to create temp tracks during the rough cut phase, allowing them to test the emotional impact of a scene without waiting for an external composer.

VI. How the Models Compare

| Dimension | Traditional Music Production | Musick AI Model |
| --- | --- | --- |
| Time-to-Market | Days to weeks per iteration | Seconds to minutes |
| Technical Barriers | High (Requires DAW knowledge) | Low (Web-based/Natural language) |
| Creative Friction | High setup and technical overhead | Minimal; focus is on the prompt |
| Capital Investment | High (Software/Hardware/Talent) | Usage-based access |

Traditional production is limited by technical labor. The high cost of changing a track’s direction often prevents experimentation. Musick AI removes this barrier by making the cost of iteration near zero. Here, the user’s role moves from manual labor to high-level curation. This model prioritizes the ability to test multiple sonic directions in minutes, ensuring that creative decisions are based on audible results rather than theoretical guesses.

VII. What Musick AI Still Can’t Do

It is vital to recognize the current technical ceiling of this technology. Precision in micro-emotional shifts remains a human domain. While the tool can generate a “sad” or “energetic” track, the subtle crescendo of a live violin or the intentional hesitation of a human pianist cannot be fully replicated.

Furthermore, personalization has limits. The system operates on patterns and cannot “know” a brand’s unique sonic identity unless that identity is described precisely in the prompt. Finally, the role of the human remains central: the software provides options, but the final judgment of quality and fit belongs solely to the user. It is a generator, not a curator.

VIII. Conclusion

Music creation is shifting away from a model of total manual control toward one of high-level curation. As the cost of generating a “first draft” drops toward zero, the value of the human ear, meaning the ability to recognize what works, becomes the primary skill. Tools like Musick AI do not aim to finish the masterpiece; they aim to ensure that the masterpiece actually gets started. By removing the friction of that first draft, they keep the creative process fluid, fast, and focused on the result.

Stop guessing and start listening. Visit Musick AI today to turn your creative feedback into audible reality and unify your team’s sound.